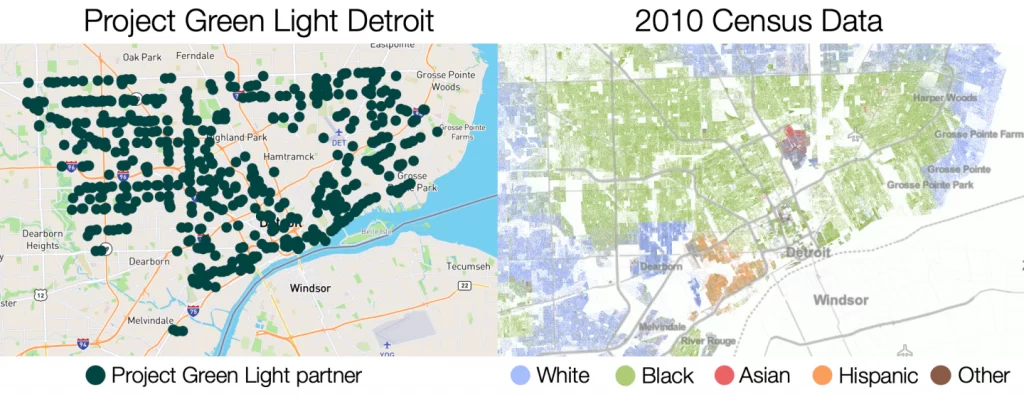

Unbeknownst to many Americans, a number of urban law enforcement agencies have begun to implement facial recognition surveillance systems that disproportionately target Black citizens. In 2016, for example, a surveillance program known as “Project Green Light” was launched in Detroit, Michigan, a predominantly Black city. A network of high-definition cameras was installed throughout the city, and facial recognition software can be applied to their footage to identify people, with the stated aim of deterring crime (Najibi, 2020). While this idea may appear sound in theory, it poses several serious problems of racial discrimination. A disproportionate number of the cameras are located in Black neighborhoods compared with white or Asian communities in the city (Najibi, 2020). This raises questions about the city’s underlying goals in establishing this surveillance system and about how it is being used in policing. Racial discrimination in law enforcement is already well documented (Alexander, 2020); Project Green Light opens a new era of it, with a surveillance network predisposed to target Black citizens.

Technology of Terror: Misidentification by Facial Recognition Technologies

Facial recognition software is less accurate at identifying people of color. Facial detection and recognition technologies (FDRTs) carry biases that trace back to the datasets on which they are built. One of the most widely used facial recognition datasets was estimated to be about 75% male and more than 80% white (Lohr, 2018). A 2018 study found that three major commercial face recognition systems, from Microsoft, IBM, and Megvii of China, were all far less reliable at classifying the gender of people with darker skin tones: 35% of darker-skinned women were misidentified (Buolamwini, 2018). That facial recognition is less accurate for people of color is a problem in and of itself, but it is even more troubling that law enforcement uses this software at a higher rate in neighborhoods where people of color live.

In Detroit, several misidentifications of Black men have led to wrongful arrests. In 2020, Robert Julian-Borchak Williams was aggressively arrested for a robbery after being identified by facial recognition software. Once he was in custody, it was clear that he was not the man in the security footage, yet he was still held for 30 hours and given an arraignment date before the case was eventually dropped (Leslie, 2020). Michael Oliver was similarly misidentified by facial recognition software and imprisoned for two and a half days, even though his facial shape, skin tone, and tattoos were all inconsistent with the video of the real suspect (Leslie, 2020).

This technology adds to a long history of racial discrimination in law enforcement, as well as a history of apathetic responses from both the political and legal systems (Alexander, 2020). The inequity of facial recognition software is well known (Harmon, 2019), and yet both Williams and Oliver still suffered a traumatizing violation of their civil liberties because of it. This discriminatory impact appears to be disregarded as the surveillance system continues to expand on the orders of city government and law enforcement leaders, funded by taxpayers’ dollars (Kaye, 2021).

What can be done?

One of two things must be done to rectify this discriminatory practice: either the technology must be improved so that it recognizes Black citizens accurately and the cameras must be distributed equally across all neighborhoods in Detroit, or the program must be disbanded and law enforcement must stop using facial recognition software in its work.

Works Cited

Alexander, Michelle. 2020. The New Jim Crow. The New Press.

Buolamwini, Joy. 2018. “Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification.” Proceedings of Machine Learning Research 81:1–15.

Harmon, Amy. 2019. “As Cameras Track Detroit’s Residents, a Debate Ensues Over Racial Bias.” The New York Times, July 8.

Kaye, Kate. 2021. “Privacy Concerns Still Loom over Detroit’s Project Green Light.” Smart Cities Dive. Retrieved March 7, 2022 (https://www.smartcitiesdive.com/news/privacy-concerns-still-loom-over-detroits-project-green-light/594230/).

Leslie, David. 2020. “Understanding Bias in Facial Recognition Technologies.” Understanding bias in facial recognition technologies: an explainer. The Alan Turing Institute. Retrieved February 23, 2022 (https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3705658).

Lohr, Steve. 2018. “Facial Recognition Is Accurate, If You’re a White Guy.” The New York Times, February 9.

Najibi, Alex. 2020. “Racial Discrimination in Face Recognition Technology.” Science in the News. Retrieved February 23, 2022 (https://sitn.hms.harvard.edu/flash/2020/racial-discrimination-in-face-recognition-technology/).

Stevens, Nikki, and Os Keyes. 2021. “Seeing Infrastructure: Race, Facial Recognition and the Politics of Data.” Cultural Studies 35(4-5):833-853 (https://www.proquest.com/scholarly-journals/seeing-infrastructure-race-facial-recognition/docview/2564467157/se-2?accountid=11264). doi: http://dx.doi.org/10.1080/09502386.2021.1895252.